Erlang Solutions: Optimising for Concurrency: Comparing and contrasting the BEAM and JVM virtual machines

news.movim.eu / PlanetJabber • 29 November, 2024 • 17 minutes

The success of any programming language in the Erlang ecosystem can be apportioned to three tightly coupled components: the semantics of the Erlang programming language (on top of which other languages are implemented), the OTP libraries and middleware (used to architect scalable and resilient concurrent systems), and the BEAM virtual machine, which is tightly coupled to the language semantics and OTP.

Take any of these components on their own, and you have a runner-up. But put the three together, and you have the uncontested winner for scalable, resilient soft real-time systems. To quote Joe Armstrong, “You can copy the Erlang libraries, but if it does not run on BEAM, you can’t emulate the semantics”. This is reinforced by Robert Virding’s First Rule of Programming, which states that “Any sufficiently complicated concurrent program in another language contains an ad hoc informally-specified bug-ridden slow implementation of half of Erlang.”

In this post, we want to explore the BEAM VM internals. We will compare and contrast them with the JVM where applicable, highlighting why you should pay attention to them and care. For too long, this component has been treated as a black box and taken for granted, without understanding the reasons or implications. It is time to change that!

Highlights of the BEAM

Erlang and the BEAM VM were invented to be the right tools to solve a specific problem. Ericsson developed them to help implement telecom infrastructure, handling both mobile and fixed networks. This infrastructure is highly concurrent and scalable in nature. It has to display soft real-time properties and may never fail. We don’t want our phone calls dropped or our online gaming experience to be affected by system upgrades, high user load or software, hardware and network outages. The BEAM VM solves these challenges using a state-of-the-art concurrent programming model. It features lightweight BEAM processes which don’t share memory. They are managed by the BEAM’s schedulers, which can run millions of them across multiple cores, and they are garbage collected on a per-process basis, in a way that is highly optimised to reduce any impact on other processes. The BEAM is also the only widely used VM deployed at scale with a built-in distribution model, which allows a program to run on multiple machines transparently.

The BEAM VM supports zero-downtime upgrades with hot code replacement, a way to modify application code at runtime. It is probably the most cited unique feature of the BEAM. Hot code loading means that the application logic can be updated by changing the runnable code in the system whilst retaining the internal process state. This is achieved by replacing the loaded BEAM files and instructing the VM to replace the references to the code in the running processes.

It is a crucial feature for zero-downtime code upgrades in telecom infrastructure, where redundant hardware was put to use to handle spikes. Nowadays, in the era of containerisation, other techniques are also used for production updates. Those who have never used it tend to dismiss it as a less important feature, but it is nonetheless useful in the development workflow. Developers can iterate faster by replacing part of their code without having to restart the system to test it. Even if the application is not designed to be upgradable in production, this can reduce the time needed for recompilation and redeployments.
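To make the idea of swapping code while keeping state more concrete, here is a minimal sketch in Python, used only as neutral notation. It is an analogy, not how the BEAM loads modules: the function `run`, the `upgrades` mapping and the two handlers are hypothetical names invented for this illustration.

```python
def run(messages, handler, upgrades):
    """Process messages in order, keeping `state` across code upgrades.

    `upgrades` maps a message index to a new handler, which replaces the
    current one just before that message is processed. This stands in for
    loading a new version of a module into a running system.
    """
    state = 0
    for index, message in enumerate(messages):
        # Swap in the new code if an upgrade is scheduled; state is untouched.
        handler = upgrades.get(index, handler)
        state = handler(state, message)
    return state


def add(state, message):
    """Version 1 of the logic: add each message to the state."""
    return state + message


def add_double(state, message):
    """Version 2 of the logic: add twice the message to the state."""
    return state + 2 * message
```

Calling `run([1, 2, 3], add, {2: add_double})` processes the first two messages with the old code and the third with the new code, while the accumulated state carries over the upgrade.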

Highlights of the JVM

The Java Virtual Machine (JVM) was invented by Sun Microsystems with the intent to provide a platform for ‘write once’ code that runs everywhere. They created an object-oriented language similar to C++, but memory-safe because its runtime error detection checks array bounds and pointer dereferences. The JVM ecosystem became extremely popular in the Internet era, making it the de-facto standard for enterprise server applications. The wide range of applicability was enabled by a virtual machine that caters for many use cases, and an impressive set of libraries supporting enterprise development.

The JVM was designed with efficiency in mind. Most of its concepts are abstractions of features found in popular operating systems, such as the threading model, which maps the VM threads to operating system threads. The JVM is highly customisable, including the garbage collector (GC) and class loaders. Some state-of-the-art GC implementations provide highly tunable features catering for a programming model based on shared memory. The JIT (just-in-time) compiler automatically compiles bytecode to native machine code with the intent to speed up parts of the application.

The JVM allows you to change the code while the program is running. It is a very useful feature for debugging purposes, but production use of this feature is not recommended due to serious limitations.

Concurrency and Parallelism

We talk about parallel code execution if parts of the code are run at the same time on multiple cores, processors or computers, while concurrent programming refers to handling events arriving at the system independently. Concurrent execution can be simulated on single-core hardware, while parallel execution cannot. Although this distinction may seem pedantic, the difference results in some very different problems to solve. Think of many cooks making a plate of carbonara pasta. In the parallel approach, the tasks are split across the number of cooks available, and a single portion would be completed as quickly as it took these cooks to complete their specific tasks. In a concurrent world, you would get a portion for every cook, where each cook does all of the tasks. You use parallelism for speed and concurrency for scale.

Parallel execution tries to decompose the problem into parts that are independent of each other. Boil the water, get the pasta, mix the egg, fry the guanciale ham, and grate the pecorino cheese. The shared data (or in our example, the serving dish) is handled by locks, mutexes and various other techniques to guarantee correctness. Another way to look at this is that the data (or ingredients) are present, and we want to utilise as many parallel CPU resources as possible to finish the job as quickly as possible.

Concurrent programming, on the other hand, deals with many events that arrive at the system at different times and tries to process all of them within a reasonable timeframe. On multi-core or distributed architectures, some of the processing may run in parallel. Another way to look at it is that the same cook boils the water, gets the pasta, mixes the eggs and so on, following a sequential algorithm which is always the same. What changes across processes (or cooks) is the data (or ingredients) to work on, which exist in multiple instances.

In summary, concurrency and parallelism are two intrinsically different problems, requiring different solutions.

Concurrency the Java way

In Java, concurrent execution is implemented using VM threads. Before the latest developments, only one threading model, called Platform Threads, existed. As they are a thin abstraction layer above operating system threads, Platform Threads are scheduled in a rather simple, priority-based way, leaving most of the work to the underlying operating system. With Java 21, a new threading model was introduced: Virtual Threads. This new model is very similar to BEAM processes since virtual threads are scheduled by the JVM, providing better performance in applications where thread contention is not negligible. Scheduling works by mounting a virtual thread on a carrier (OS) thread, unmounting it when the virtual thread becomes blocked, and replacing it with another virtual thread from the pool.

Since Java promotes the use of shared data structures, both threading models suffer from performance bottlenecks caused by synchronisation-related issues such as frequent CPU cache invalidation and locking errors. Also, programming with concurrency primitives is a difficult task because of the challenges created by the shared memory model. To overcome these difficulties, there are attempts to simplify and unify the concurrent programming models, with the most successful attempt being the Akka framework. Unfortunately, it is not widely used, limiting its usefulness as a unified concurrency model, even for enterprise-grade applications. While Akka does a great job at replicating the higher-level constructs, it is somewhat limited by the lack of JVM primitives that would allow it to be highly optimised for concurrency. While the primitives of the JVM enable a wider range of use cases, they make programming distributed systems harder, as they have no built-in primitives for communication and are often based on a shared memory model. For example, where in a distributed system do you place your shared memory? And what is the cost of accessing it?
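The cost of shared data can be illustrated with a small sketch. It is written in Python rather than Java purely for brevity, but the pattern is the same in any shared-memory model: every thread has to take the same lock before touching the shared value, so the threads queue up behind each other. The function name `shared_counter` and its parameters are hypothetical.

```python
import threading


def shared_counter(n_threads=4, increments=1000):
    """Increment one shared counter from several threads.

    Correctness depends on every thread taking the same lock, which also
    means the threads serialise on that lock instead of running freely.
    """
    counter = {"value": 0}      # the shared, mutable data
    lock = threading.Lock()     # the single point of contention

    def worker():
        for _ in range(increments):
            with lock:          # without this, updates could be lost
                counter["value"] += 1

    threads = [threading.Thread(target=worker) for _ in range(n_threads)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter["value"]
```

The lock only works because all threads live in one address space, which is why this approach does not carry over to a distributed system, as the questions above point out.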

Garbage Collection

Garbage collection is a critical task for most applications, but applications may have very different performance requirements. Since the JVM is designed to be a ubiquitous platform, it is evident that there is no one-size-fits-all solution. There are garbage collectors designed for resource-limited environments such as embedded devices, and also for resource-intensive, highly concurrent or even real-time applications. The JVM GC interface makes it possible to use third-party collectors as well.

Due to the Java Memory Model, concurrent garbage collection is a hard task. The JVM needs to keep track of the memory areas that are shared between multiple threads, the access patterns to the shared memory, thread states, locks and so on. Because of shared memory, collections affect multiple threads simultaneously, making it difficult to predict the performance impact of GC operations. So difficult, that there is an entire industry built to provide tools and expertise for GC optimisation.

The BEAM and Concurrency

Some say that the JVM is built for parallelism, the BEAM for concurrency. While this might be an oversimplification, its concurrency model makes the BEAM more performant in cases where thousands or even millions of concurrent tasks should be processed in a reasonable timeframe.

The BEAM provides lightweight processes to give context to the running code. BEAM processes are different from operating system processes, but they share many concepts. BEAM processes, also called actors, don’t share memory, but communicate through message passing, copying data from one process to another. Message passing is a feature that the virtual machine implements through mailboxes owned by individual processes. It is a non-blocking operation, which means that sending a message to another process is almost instantaneous and the execution of the sender is not blocked during the operation. The messages sent are in the form of immutable data, copied from the stack of the sending process to the mailbox of the receiving one. There are no shared data structures, so this can be achieved without the need for locks and mutexes among the communicating processes, only a lock on the mailbox in case multiple processes send a message to the same recipient in parallel.
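As a rough illustration of the mailbox model, the sketch below builds a toy process in Python: it owns its state, receives immutable tuples through a queue, and answers by sending a message back. This is only an analogy under our own assumptions. The class `MailboxProcess` and the message shapes are hypothetical, an OS thread is far heavier than a BEAM process, and the reply queue stands in for the sender's own mailbox.

```python
import queue
import threading


class MailboxProcess:
    """A toy actor: private state, one mailbox, nothing shared."""

    def __init__(self):
        # queue.Queue locks internally, much like the per-mailbox lock
        # needed when several senders target the same recipient.
        self.mailbox = queue.Queue()
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()

    def send(self, message):
        # The queue is unbounded, so the sender never waits for the receiver.
        self.mailbox.put(message)

    def stop(self):
        self.send(("stop",))
        self._thread.join()

    def _loop(self):
        total = 0  # local to this loop; no other thread can reach it
        while True:
            message = self.mailbox.get()
            if message[0] == "add":
                total += message[1]
            elif message[0] == "get":
                message[1].put(total)  # reply with a message, not shared data
            elif message[0] == "stop":
                return
```

A caller sends `("add", 1)`, `("add", 2)` and then `("get", reply_queue)`, and reads the total from `reply_queue`. The state is never read or written from outside the process.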

Immutable data and message passing together enable the programmer to write processes which work independently of each other and focus on functionality instead of the low-level management of the memory and scheduling of tasks. It turns out that this simple design is effective on a single thread, on multiple threads on a local machine running in the same VM and, using the inter-VM communication facilities of the BEAM, across the network on other machines running the BEAM. Because the messages are immutable between processes, they can be scheduled to run on another OS thread (or machine) without locking, providing almost linear scaling on distributed, multi-core architectures. The processes are handled in the same way on a local VM as in a cluster of VMs: message sending works transparently regardless of the location of the receiving process.

Processes do not share memory, allowing data replication for resilience and distribution for scale. With two instances of the same process running on one machine or on separate machines, state updates can be shared between them. If one of the processes or machines fails, the other has an up-to-date copy of the data and can continue handling requests without interruption, making the system fault-tolerant. If more than one machine is operational, all the processes can handle requests, giving you scalability. The BEAM provides highly optimised primitives for all of this to work seamlessly, while OTP (the “standard library”) provides the higher level constructs to make the life of the programmers easy.
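The replication idea can be sketched in a few lines. The Python below is a deliberately simplified model with hypothetical names (`Replica`, `broadcast`, `lookup`): every update is sent to each live replica, which stores its own copy, so a surviving replica can answer after another one fails. It ignores everything that makes real replication hard, such as message ordering, network partitions and rejoining nodes.

```python
class Replica:
    """One instance of a process holding its own copy of the state."""

    def __init__(self, name):
        self.name = name
        self.state = {}
        self.alive = True

    def receive(self, key, value):
        self.state[key] = value  # a private copy, nothing is shared


def broadcast(replicas, key, value):
    """Send the same state update to every replica that is still running."""
    for replica in replicas:
        if replica.alive:
            replica.receive(key, value)


def lookup(replicas, key):
    """Answer from the first live replica; any of them has the data."""
    for replica in replicas:
        if replica.alive:
            return replica.name, replica.state[key]
    raise RuntimeError("no replica available")
```

If the first replica is marked as failed after an update has been broadcast, `lookup` simply answers from the second one with the same value.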

Scheduler

We mentioned that one of the strongest features of the BEAM is the ability to run concurrent tasks in lightweight processes. Managing these processes is the task of the scheduler.

The scheduler starts, by default, an OS thread for every core and optimises the workload between them. Each process consists of code to be executed and a state which changes over time. The scheduler picks the first process in the run queue that is ready to run, and gives it a certain number of reductions to execute, where each reduction is the rough equivalent of a BEAM command. Once the process has run out of reductions, is blocked by I/O, is waiting for a message, or has finished executing its code, the scheduler picks the next process from the run queue and dispatches it. This scheduling technique is called pre-emptive.

We mentioned the Akka framework earlier. Its biggest drawback is the need to annotate the code with scheduling points, as the scheduling is not done at the JVM level. The BEAM takes this control out of the hands of the programmer, so soft real-time properties are preserved and guaranteed, as there is no risk of programmers accidentally causing process starvation.
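The run-queue loop described above can be sketched with Python generators. This is a toy model under our own assumptions: `worker`, `schedule` and `budget` are hypothetical names, one `yield` stands in for one reduction, and there is a single run queue. On the BEAM the VM counts reductions itself, so the code being scheduled contains no explicit yield points.

```python
from collections import deque


def worker(name, steps, log):
    """A toy process: each loop iteration costs one reduction."""
    for step in range(steps):
        log.append((name, step))
        yield  # one reduction consumed; control returns to the scheduler


def schedule(processes, budget):
    """Run each process for at most `budget` reductions per turn."""
    run_queue = deque(processes)
    while run_queue:
        process = run_queue.popleft()
        for _ in range(budget):
            try:
                next(process)
            except StopIteration:
                break  # the process finished; it is not requeued
        else:
            # Budget used up: move the process to the back of the queue.
            run_queue.append(process)
```

With a budget of 2, a process `A` that needs three steps and a process `B` that needs one are interleaved as A, A, B, A: the long-running process is paused so that the short one is not starved.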

The processes can be spread around the available scheduler threads to maximise CPU utilisation. There are many ways to tweak the scheduler but it is rarely needed, only for edge cases, as the default configuration covers most usage patterns.

There is a sensitive topic that frequently pops up regarding schedulers: how to handle Natively Implemented Functions (NIFs). A NIF is a code snippet written in C, compiled as a library and run in the same memory space as the BEAM for speed. The problem with NIFs is that they are not pre-emptive, and can affect the schedulers. In recent BEAM versions, a new feature, dirty schedulers, was added to give better control for NIFs. Dirty schedulers are separate schedulers that run in different threads to minimise the interruption a NIF can cause in a system. The word dirty refers to the nature of the code that is run by these schedulers.
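The motivation for dirty schedulers can be shown with a loose analogy in Python. Here an `asyncio` event loop plays the role of a normal scheduler, and a separate thread pool plays the role of a dirty scheduler. The names `long_native_call`, `heartbeat` and `dirty_pool` are hypothetical, and the sleep merely stands in for uninterruptible native work.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor


def long_native_call(n):
    """Stands in for a long-running NIF that cannot be interrupted."""
    time.sleep(0.05)
    return n * n


async def heartbeat(beats, count):
    """Stands in for ordinary processes that must keep being scheduled."""
    for _ in range(count):
        beats.append(time.monotonic())
        await asyncio.sleep(0.005)


async def main():
    beats = []
    loop = asyncio.get_running_loop()
    # The blocking call runs on a separate thread, so the event loop
    # stays free to run the heartbeat in the meantime.
    with ThreadPoolExecutor(max_workers=1) as dirty_pool:
        result, _ = await asyncio.gather(
            loop.run_in_executor(dirty_pool, long_native_call, 7),
            heartbeat(beats, 3),
        )
    return result, len(beats)
```

Running `asyncio.run(main())` returns the result of the long call together with the number of heartbeats recorded. Had the long call been made directly on the event loop, no heartbeat could have run until it returned.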

Garbage Collector

Modern, high-level programming languages today mostly use a garbage collector for memory management. The BEAM languages are no exception. Trusting the virtual machine to handle the resources and manage the memory is very handy when you want to write high-level concurrent code, as it simplifies the task. The underlying implementation of the garbage collector is fairly straightforward and efficient, thanks to the memory model based on an immutable state. Data is copied, not mutated and the fact that processes do not share memory removes any process inter-dependencies, which, as a result, do not need to be managed.

Another feature of the BEAM is that garbage collection is run only when needed, on a per-process basis, without affecting other processes waiting in the run queue. As a result, the garbage collection in Erlang does not ‘stop the world’. It prevents processing latency spikes because the VM is never stopped as a whole: only specific processes are, and never all of them at the same time. In practice, it is just part of what a process does and is treated as another reduction. Garbage collection suspends the process being collected for a very short interval, often microseconds. As a result, there will be many small bursts, triggered only when the process needs more memory. A single process usually doesn’t allocate large amounts of memory, and is often short-lived, further reducing the impact by immediately freeing up all its allocated memory on termination.
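A toy model helps to show what "per-process, only when needed" means. In the Python sketch below, each process owns a private heap and collects it only when it runs out of room, so an idle process never pays for a busy neighbour. `HeapProcess`, `heap_limit` and the explicit liveness flag are hypothetical simplifications; a real collector discovers live data by tracing from the process's roots and can also grow the heap.

```python
class HeapProcess:
    """A toy process with a private heap that only it ever collects."""

    def __init__(self, heap_limit):
        self.heap_limit = heap_limit
        self.heap = []         # (value, is_live) cells owned by this process
        self.collections = 0   # how many times this process was collected

    def allocate(self, value, is_live=False):
        # Collection is triggered by this process needing more memory,
        # not by a global timer or by other processes.
        if len(self.heap) >= self.heap_limit:
            self.collect()
        self.heap.append((value, is_live))

    def collect(self):
        # Copy the live cells into a fresh heap and drop the rest.
        self.heap = [cell for cell in self.heap if cell[1]]
        self.collections += 1
```

If one process allocates ten short-lived values with a heap limit of four while another allocates nothing, the first is collected twice and the second not at all.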

More about features

The features of the garbage collector are discussed in an excellent blog post by Lukas Larsson. There are many intricate details, but it is optimised to handle immutable data in an efficient way, dividing the data between the stack and the heap for each process. The best approach is to do the majority of the work in short-lived processes.

A question that often comes up on this topic is how much memory the BEAM uses. Under the hood, the VM allocates big chunks of memory and uses custom allocators to store the data efficiently and minimise the overhead of system calls.

This has two visible effects: the used memory decreases gradually after the space is no longer needed, and reallocating huge amounts of data might mean doubling the current working memory. The first effect can, if necessary, be mitigated by tweaking the allocator strategies. The second one is easy to monitor and plan for if you have visibility of the different types of memory usage. (One monitoring tool that provides such system metrics out of the box is WombatOAM.)

JVM vs BEAM concurrency

As mentioned before, the JVM and the BEAM handle concurrent tasks very differently. Under high load, shared resources become bottlenecks. In a Java application, we usually can’t avoid that. That’s why the BEAM is superior in these kinds of applications. While memory copy has a certain cost, the performance impact caused by the synchronised access to shared resources is much higher. We performed many tests to measure this impact.

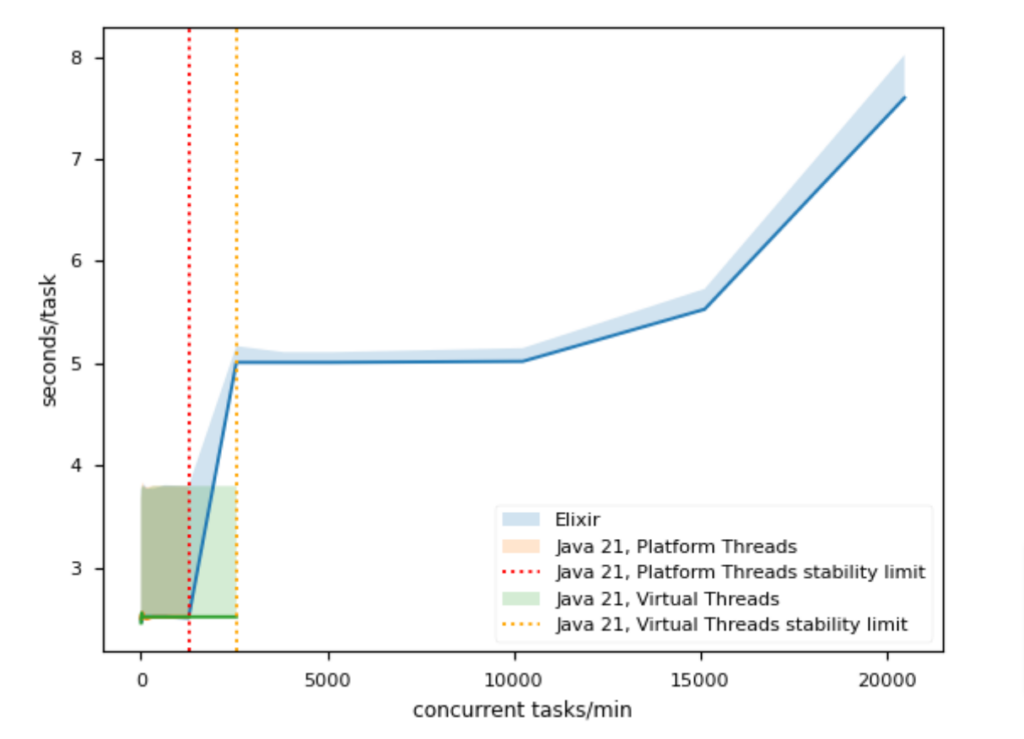

This chart nicely displays the large differences in performance between the JVM and the BEAM. In this test, the applications were implemented in Elixir and Java. The Elixir code compiles to BEAM bytecode, while the Java code compiles to JVM bytecode.

When not to use the BEAM

It is very much about the right tool for the job. Do you need a system to be extremely fast, but are not concerned about concurrency? Handling a few events in parallel, and having to handle them fast? Need to crunch numbers for graphics, AI or analytics? Go down the C++, Python or Java route. Telecom infrastructure does not need fast operations on floats, so speed was never a priority. Dynamic typing adds to this: all type checks have to be done at runtime, which means compile-time optimisations are not as trivial. So number crunching is best left to the JVM, Go or other languages which compile to native code. It is no surprise that floating point operations on Erjang, the version of Erlang running on the JVM, were 5000% faster than on the BEAM. But where we’ve seen the BEAM shine is using its concurrency to orchestrate number crunching, outsourcing the analytics to C, Julia, Python or Rust. You do the map outside the BEAM and the reduction within the BEAM.

The mantra has always been fast enough. It takes a few hundred milliseconds for humans to perceive a stimulus (an event) and process it in their brains, meaning that micro- or nanosecond response times are not necessary for many applications. Nor would you use the BEAM for microcontrollers; it is too resource-hungry. But for embedded systems with a bit more processing power, where multi-core is becoming the norm and you need concurrency, the BEAM shines. Back in the 90s, we were implementing telephony switches handling tens of thousands of subscribers running on embedded boards with 16 MB of memory. How much memory does a Raspberry Pi have these days? And finally, hard real-time systems. You would probably not want the BEAM to manage your airbag control system. You need hard guarantees, something only a hard real-time OS and a language with no garbage collection or exceptions can provide. An implementation of an Erlang VM running on bare metal such as GRiSP will give you similar guarantees.

Conclusion

Use the right tool for the job. If you are writing a soft real-time system which has to scale out of the box, should never fail, and do so without the hassle of having to reinvent the wheel, the BEAM is the battle-proven technology you are looking for.

For many, it works as a black box. Not knowing how it works would be analogous to driving a Ferrari and not being capable of achieving optimal performance or not understanding what part of the motor that strange sound is coming from. This is why you should learn more about the BEAM, understand its internals and be ready to fine-tune and fix it.

For those who have used Erlang and Elixir in anger, we have launched a one-day instructor-led course which will demystify and explain a lot of what you saw whilst preparing you to handle massive concurrency at scale. The course is available through our new instructor-led remote training; learn more here. We also recommend The BEAM book by Erik Stenman and the BEAM Wisdoms, a collection of articles by Dmytro Lytovchenko.

If you’d like to speak to a member of the team, feel free to drop us a message.

The post Optimising for Concurrency: Comparing and contrasting the BEAM and JVM virtual machines appeared first on Erlang Solutions.