Traditionally, the fintech industry relied on proprietary software, with usage and distribution restricted by paid licences. Fintech open-source technologies were distrusted due to security concerns over visible code in complex systems.

But fast-forward to today and financial institutions, including neobanks like Revolut and Monzo, have embraced open source solutions. These banks have built technology stacks on open-source platforms, using new software and innovation to strengthen their competitive edge.

While proprietary software has its role, it faces challenges exemplified by Oracle Java’s subscription model changes, which have led to significant cost hikes. In contrast, open source delivers flexibility, scalability, and more control, making it a great choice for fintechs aiming to remain adaptable.

Curious why open source is the smart choice for fintech? Let’s look into how this shift can help future-proof operations, drive innovation, and enhance customer-centric services.

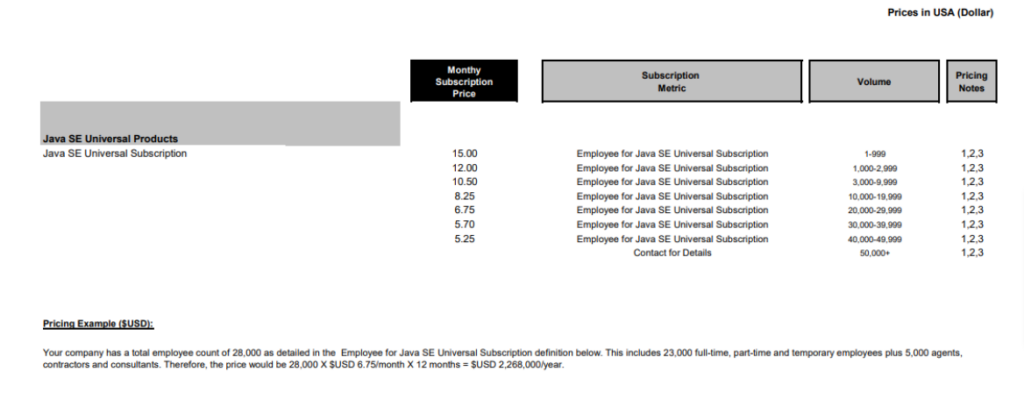

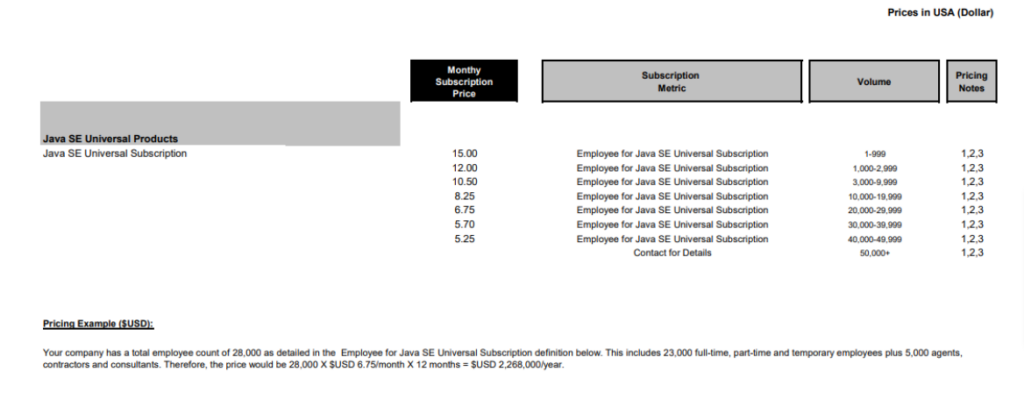

The impact of Oracle Java’s pricing changes

Before we understand why open source is a smart choice for fintech, let’s look at a recent example that highlights the risks of relying on proprietary software—Oracle Java’s subscription model changes.

A change to subscription

Java, known as the “language of business,” has been the top choice for developers and 90% of Fortune 500 companies for over 28 years, due to its stability, performance, and strong Oracle Java community.

In January 2023, Oracle quietly shifted its Java SE subscription model to an employee-based system, charging businesses based on total headcount, not just the number of users. This change alarmed many subscribers and resulted in steep increases in licensing fees. According to Gartner, these changes made operations two to five times more expensive for most organisations.
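To see why a headcount-based model can multiply costs, here is a minimal sketch of the arithmetic. All figures (employee count, number of Java users, and both monthly prices) are hypothetical values chosen for illustration and are not Oracle’s actual prices.

```python
def annual_cost(units: int, price_per_unit_month: float) -> float:
    """Annual subscription cost for a given number of billable units."""
    return units * price_per_unit_month * 12


# Hypothetical company and prices, for illustration only.
total_employees = 1_000          # everyone counts under an employee-based model
java_users = 200                 # only these counted under a user-based model
price_per_user_month = 2.50      # assumed price, not a real list price
price_per_employee_month = 1.50  # assumed price, not a real list price

old_cost = annual_cost(java_users, price_per_user_month)
new_cost = annual_cost(total_employees, price_per_employee_month)
ratio = new_cost / old_cost

print(f"user-based: {old_cost:,.0f}")
print(f"employee-based: {new_cost:,.0f}")
print(f"increase: {ratio:.1f}x")
```

Even though the assumed per-unit price is lower in the second model, billing every employee rather than every user triples the total in this example. The fewer Java users a company has relative to its headcount, the larger the multiplier.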

Oracle Java SE Universal Subscription Global Price List (by volume)

Impact on Oracle Java SE user base

By January 2024, many Oracle Java SE subscribers had switched to OpenJDK, the open-source version of Java. Online sentiment towards Oracle has been unfavourable, with many users expressing dissatisfaction in forums. Those who stuck with Oracle are now facing hefty subscription fee increases with little added benefit.

Lessons from Oracle Java SE

For fintech companies, Oracle Java’s pricing changes have highlighted the risks of proprietary software. In particular, there are unexpected cost hikes, less flexibility, and disruptions to critical infrastructure. Open source solutions, on the other hand, give fintech firms more control, reduce vendor lock-in, and allow them to adapt to future changes while keeping costs in check.

The advantages of open source technologies for Fintech

Open source software is gaining attention in financial institutions, thanks to the rise of digital financial services and fintech advancements.

It is expected to grow by 24% by 2025, and companies that embrace open source benefit from enhanced security, support for cryptocurrency trading, and a boost to fintech innovation.

Cost-effectiveness

The cost advantages of open-source software have been a major draw for companies looking to shift from proprietary systems. For fintech companies, open-source reduces operational expenses compared to the unpredictable, high costs of proprietary solutions like Oracle Java SE.

Open source software is often free, allowing fintech startups and established firms to lower development costs and redirect funds to key areas such as compliance, security, and user experience. It also avoids fees like:

- Multi-user licences
- Administrative charges
- Ongoing annual software support charges

These savings help reduce operating expenses while enabling investment in valuable services like user training, ongoing support, and customised development, driving growth and efficiency.

A solution to big tech monopolies

Monopolies in tech, particularly in fintech, are increasing. As reported by CB Insights, about 80% of global payment transactions are controlled by just a few major players. These monopolies stifle innovation and drive up costs.

Open-source software decentralises development, preventing any single entity from holding total control. It offers fintech companies an alternative to proprietary systems, reducing reliance on monopolistic players and fostering healthy competition. Open-source models promote transparency, innovation, and lower costs, helping create more inclusive and competitive systems.

Transparent and secure solutions

Security concerns have long made companies and startups hesitant to adopt open-source software.

A common myth about open source is that its public code makes it insecure. In practice, open source benefits from transparency, because public code allows continuous scrutiny. Security flaws can be discovered and addressed quickly by the community, whereas vulnerabilities in proprietary software may remain hidden.

An example is Vocalink, which powers real-time global payment systems. Vocalink uses Erlang, an open-source language designed for high-availability systems, ensuring secure, scalable payment handling. The transparency of open source allows businesses to audit security, ensure compliance, and quickly implement fixes, leading to more secure fintech infrastructure.

Ongoing community support

Beyond security, open source benefits from vibrant communities of developers and users who share knowledge and collaborate to enhance software. This fosters innovation and accelerates development, allowing for faster adaptation to trends or market demands.

Since the code is open, fintech firms can build custom solutions, which can be contributed back to the community for others to use. The rapid pace of innovation within these communities helps keep the software relevant and adaptable.

Interoperability

Interoperability is a game-changer for open-source solutions in financial institutions, allowing for the seamless integration of diverse applications and systems, which is essential for financial services with complex tech stacks.

By adopting open standards (publicly accessible guidelines ensuring compatibility), financial institutions can eliminate costly manual integrations and enable plug-and-play functionality. This enhances agility, allowing institutions to adopt the best applications without being tied to a single vendor.

A notable example is NatWest’s Backplane, an open-source interoperability solution built on FDC3 standards. Backplane enables customers and fintechs to integrate their desktop apps with various banking and fintech applications, enhancing the financial desktop experience. This approach fosters innovation, saves time and resources, and creates a more flexible, customer-centric ecosystem.

Future-proofing for longevity

Open-source software has long-term viability. Since the source code is accessible, even if the original team disbands, other organisations, developers or the community at large can maintain and update the software. This ensures the software remains usable and up-to-date, preventing reliance on unsupported tools.

Open Source powering Fintech trends

According to the latest study by McKinsey and Company, artificial intelligence (AI), machine learning (ML), blockchain technology, and hyper-personalisation will be among the key technologies driving financial services in the next decade.

Open-source platforms will play a key role in supporting and accelerating these developments, making them more accessible and innovative.

AI and fintech innovation

- Cost-effective AI/ML: Open-source AI frameworks like TensorFlow, PyTorch, and scikit-learn enable startups to prototype and deploy AI models affordably, with the flexibility to scale as they grow. This democratisation of AI allows smaller players to compete with larger firms.
- Fraud detection and personalisation: AI-powered fraud detection and personalised services are central to fintech innovation. Open-source AI libraries help companies like Stripe and PayPal detect fraudulent transactions by analysing patterns, while AI enables dynamic pricing and custom loan offers based on user behaviour.
- Efficient operations: AI streamlines back-office tasks through automation, knowledge graphs, and natural language processing (NLP), improving fraud detection and overall operational efficiency.
- Privacy-aware AI: Emerging technologies like federated learning and encryption tools help keep sensitive data secure, enabling rapid AI innovation while maintaining privacy and compliance.
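As a toy illustration of detecting fraud "by analysing patterns", the sketch below flags a transaction whose amount deviates sharply from a customer’s past spending, using a simple z-score rule. It uses only Python’s standard library. The function name, the threshold, and the sample data are assumptions made for this example, and it does not represent how any named company or library actually works. Production systems typically use trained models over many more features.

```python
from statistics import mean, stdev


def flag_unusual(history: list[float], amount: float, threshold: float = 3.0) -> bool:
    """Return True if `amount` is more than `threshold` standard deviations
    away from the mean of the customer's past transaction amounts."""
    if len(history) < 2:
        return False  # not enough data to estimate the spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # all past amounts identical: any change is unusual
    return abs(amount - mu) / sigma > threshold


# Hypothetical spending history for one customer.
history = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0]

print(flag_unusual(history, 43.0))   # a typical amount
print(flag_unusual(history, 950.0))  # far outside the usual range
```

The same idea scales up with open-source libraries: the hand-written rule would be replaced by a model trained on labelled or historical transaction data.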

Blockchain and fintech

Open-source blockchain platforms allow fintech startups to innovate without the hefty cost of proprietary systems:

- Open-source blockchain platforms: Platforms like Ethereum, Bitcoin Core, and Hyperledger are decentralising finance, providing transparency, reducing reliance on intermediaries, and reshaping financial services.
- Decentralised finance (DeFi): DeFi is projected to see an impressive rise, with P2P lending growing from $43.16 billion in 2018 to an estimated $567.3 billion by 2026. Platforms like Uniswap and Aave, built on Ethereum, are pioneering decentralised lending and asset management, offering an alternative to traditional banking. By 2023, Ethereum alone had $23 billion locked in DeFi assets, reflecting its growing influence in the fintech space.
- Enterprise blockchain solutions: Open-source frameworks like Hyperledger Fabric and Corda are enabling enterprises to develop private, permissioned blockchain solutions, enhancing security and scalability across industries, including finance.
- Cost-effective innovation: Startups leveraging open-source blockchain technologies can build innovative financial services while keeping costs low, helping them compete effectively with traditional financial institutions.

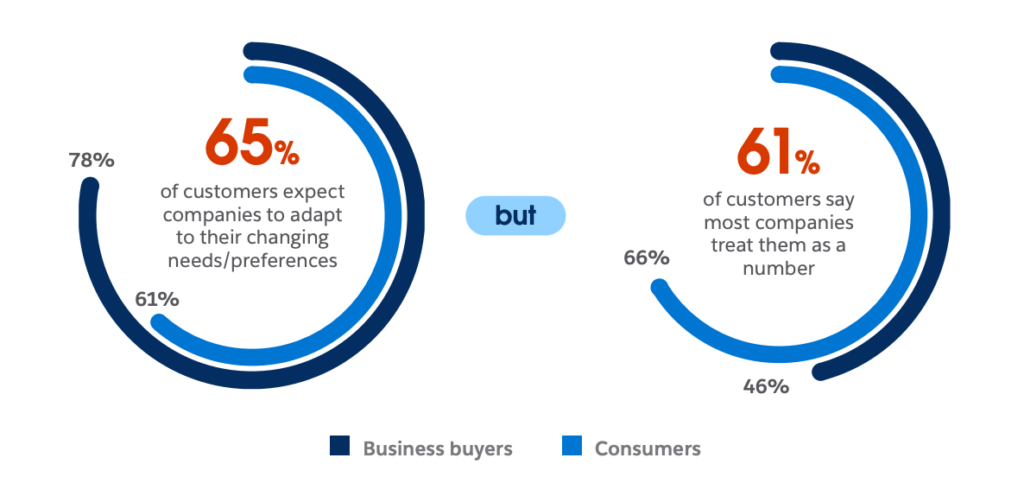

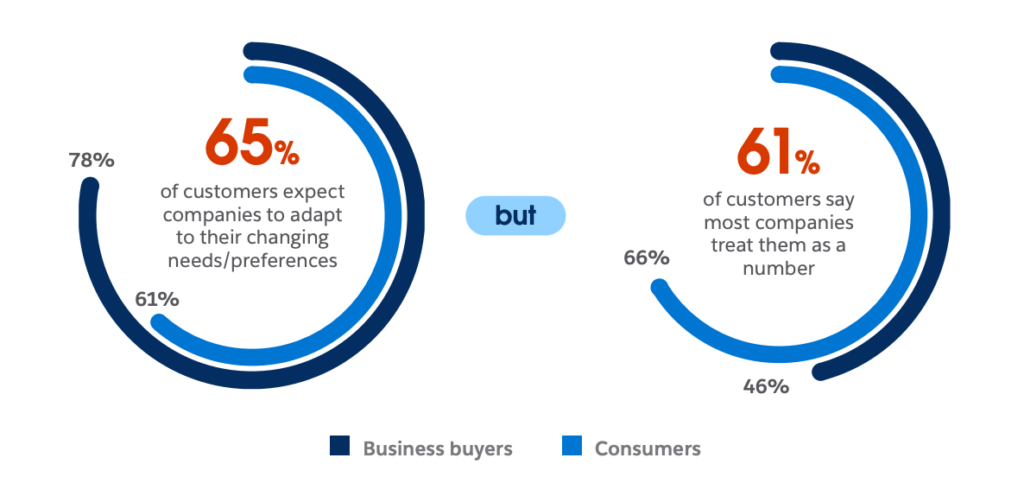

Hyper-personalisation

Hyper-personalisation is another key trend in fintech, with AI and open-source technologies enabling companies to create highly tailored financial products. This shift moves away from the traditional “one-size-fits-all” model, helping fintechs solve niche customer challenges and deliver more precise services.

Consumer demand for personalisation

A Salesforce survey found that 65% of consumers expect businesses to personalise their services, while 86% are willing to share data to receive more customised experiences.

Source: State of the connected customer

The expectation for personalised services is shaping how financial institutions approach customer engagement and product development.

Real-world examples of open-source fintech

Companies like Robinhood and Chime leverage open-source tools to analyse user data and create personalised financial recommendations. These platforms use technologies like Apache Kafka and Apache Spark to process real-time data, improving the accuracy and relevance of their personalised offerings, from customised investment options to tailored loan products.

Implementing hyper-personalisation lets fintech companies strengthen customer relationships, boost retention, and increase deposits. By leveraging real-time, data-driven technologies, they can offer highly relevant products that foster customer loyalty and maximise value throughout the customer lifecycle. With the scalability and flexibility of open-source solutions, companies can provide precise, cost-effective personalised services, positioning themselves for success in a competitive market.

Erlang and Elixir: Open Source solutions for fintech applications

Released as open source in 1998, Erlang has become essential for fintech companies that need scalable, high-concurrency, and fault-tolerant systems. Its open-source nature, combined with the capabilities of Elixir (which builds on Erlang’s robust architecture), enables fintech firms to innovate without relying on proprietary software, providing the flexibility to develop custom and efficient solutions.

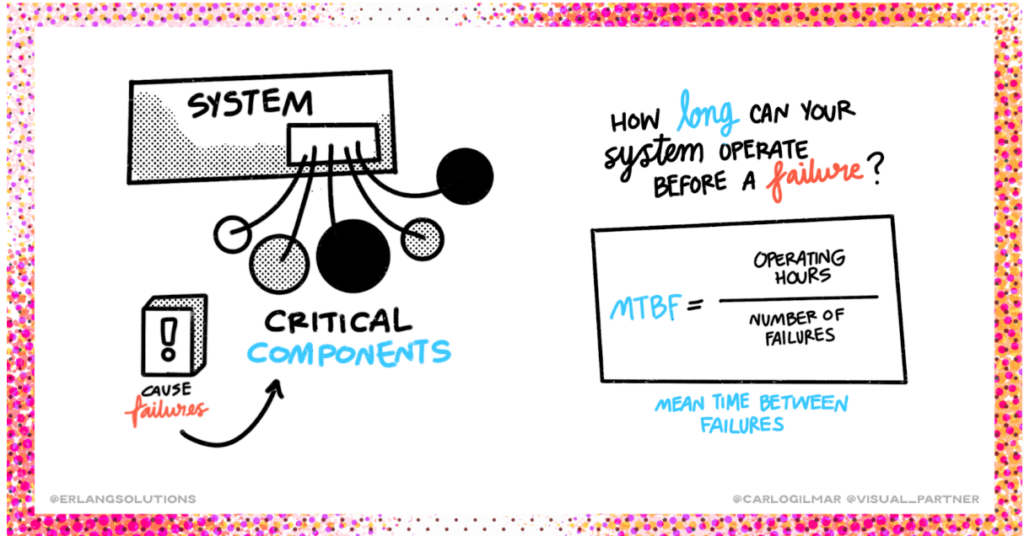

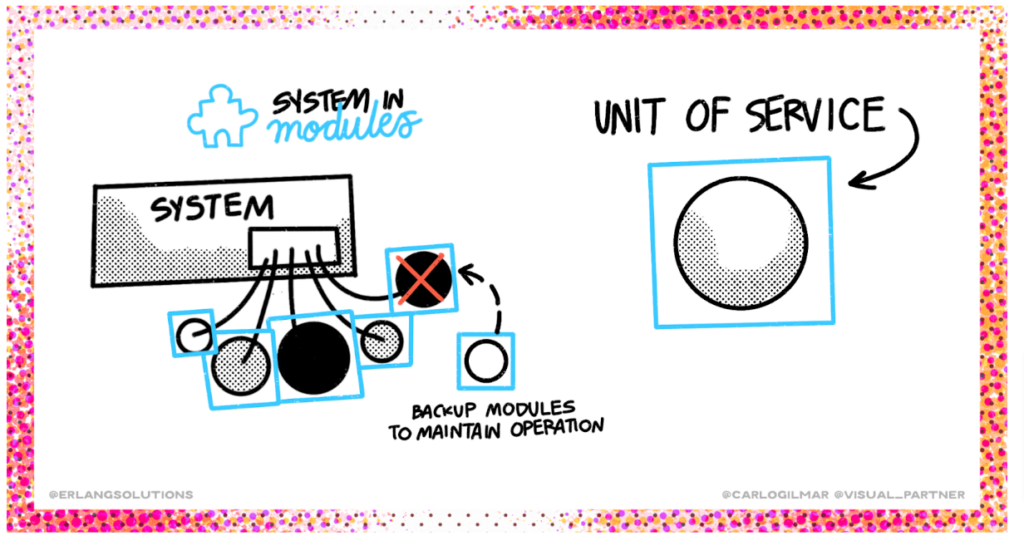

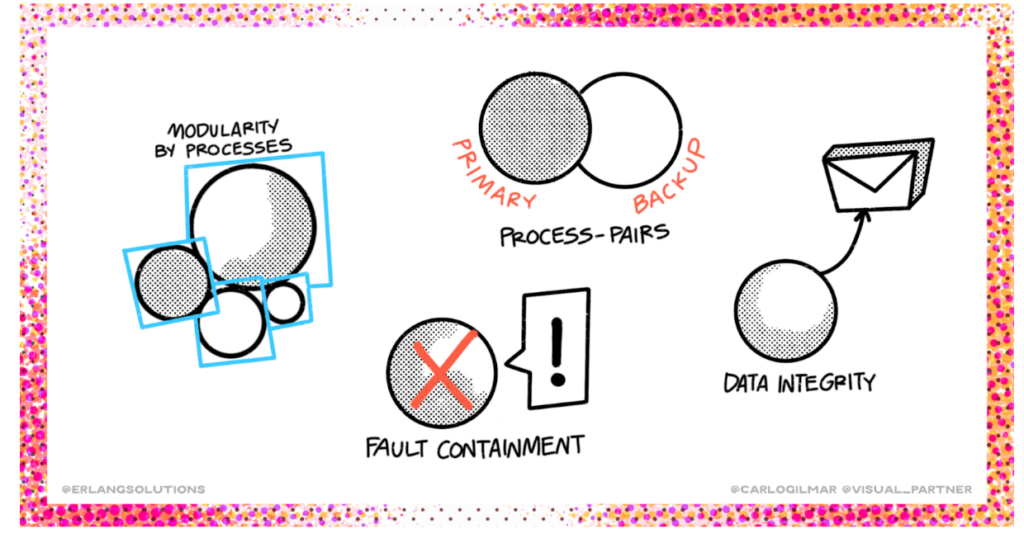

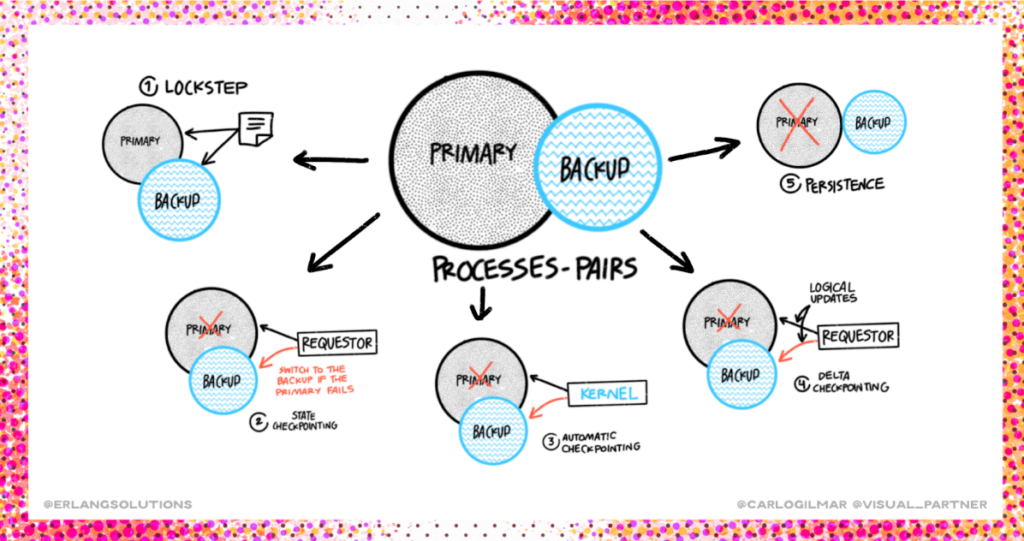

The architectures of both Erlang and Elixir are designed to minimise downtime, potentially to zero, making them well-suited for real-time financial transactions.

Why Erlang and Elixir are ideal for Fintech:

- Reliability: Erlang’s and Elixir’s design allows applications to keep functioning during hardware or network failures, which is crucial for financial services that operate 24/7. Elixir inherits Erlang’s reliability while providing a more modern syntax for development.
- Scalability: Erlang and Elixir can handle thousands of concurrent processes, making them a strong fit for fintech companies looking to scale quickly, especially when dealing with growing data volumes and transactions. Elixir complements Erlang’s scalability with modern tooling and improved performance for certain types of workloads.
- Fault tolerance: Built-in error detection and recovery features ensure that unexpected failures are managed with minimal disruption. This is vital for fintech applications, where downtime can lead to significant financial losses. Erlang’s automatic recovery philosophy, in which failed processes are restarted by supervisors, together with Elixir’s features, supports very high availability and helps prevent lost transactions.
- Concurrency & distribution: Both Erlang and Elixir excel at managing multiple concurrent processes across distributed systems. This makes them ideal for fintechs with global operations that require real-time data processing across various locations.
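The automatic recovery idea behind the fault tolerance point can be sketched in a few lines. In Erlang and Elixir this is handled by OTP supervisors that restart isolated processes; the Python below is only a simplified analogy of that restart loop, not the OTP API. The names `supervise` and `flaky_payment_worker`, and the restart limit, are hypothetical and exist only for this illustration.

```python
from typing import Callable


def supervise(task: Callable[[], str], max_restarts: int = 3) -> str:
    """Run `task`, restarting it when it raises, up to `max_restarts` times."""
    restarts = 0
    while True:
        try:
            return task()
        except Exception as exc:
            if restarts >= max_restarts:
                # Give up once the restart budget is exhausted.
                raise RuntimeError("restart limit reached") from exc
            restarts += 1


# Hypothetical worker that fails twice before succeeding.
attempts = {"count": 0}


def flaky_payment_worker() -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("simulated network failure")
    return "payment processed"


result = supervise(flaky_payment_worker)
print(result, "after", attempts["count"], "attempts")
```

The design point is that the worker contains no defensive error handling of its own. It simply fails, and a separate component decides whether and how often to restart it, which keeps failure policy in one place.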

Open-source fintech use cases

Several leading fintech companies have already used Erlang to build scalable, reliable systems that support their complex operations and real-time transactions.

- Klarna: This major European fintech relies on Erlang to manage real-time e-commerce payment solutions, where scalability and reliability are critical for managing millions of transactions daily.
- Goldman Sachs: Erlang is utilised in Goldman Sachs’ high-frequency trading platform, allowing for ultra-low latency and real-time processing essential for responding to market conditions in microseconds.
- Kivra: Erlang/Elixir supports Kivra’s backend services, managing secure digital communications for millions of users, and ensuring constant uptime and data security.

Erlang and Elixir: supporting future fintech trends

The features of Erlang and Elixir align well with emerging fintech trends:

- DeFi and Decentralised Applications (dApps): With the growth of decentralised finance (DeFi), Erlang’s and Elixir’s fault tolerance and real-time scalability make them ideal for building dApps that require secure, distributed networks capable of handling large transaction volumes without failure.
- Hyper-personalisation: As demand for hyper-personalised financial services grows, Erlang and Elixir’s ability to process vast amounts of real-time data across users simultaneously makes them vital for delivering tailored, data-driven experiences.
- Open banking: Erlang and Elixir’s concurrency support enables fintechs to build seamless, scalable platforms in the open banking era, where various financial systems must interact across multiple applications and services to provide integrated solutions.

Erlang and Elixir can handle thousands of real-time transactions with potentially zero downtime, making them well-suited for trends like DeFi, hyper-personalisation, and open banking. Their flexibility and active developer community help fintechs innovate without being locked into costly proprietary software.

To conclude

Fintech businesses are navigating an increasingly complex and competitive landscape where traditional solutions no longer provide a competitive edge. If you’re a company still reliant on proprietary software, ask yourself: Is your system equipped to expect the unexpected? Can your existing solutions keep up with market demands?

Open-source technologies offer a solution to these challenges. Fintech firms can reduce costs, improve security, and, most importantly, innovate and scale according to their needs. Whether by reducing vendor lock-in, tapping into a vibrant developer community, or leveraging customisation, open-source software is set to transform the fintech experience, providing the tools necessary to stay ahead in a digital-first world. If you’re interested in exploring how open-source solutions like Erlang or Elixir can help future-proof your fintech systems, contact the Erlang Solutions team.

The post Why Open Source Technologies is a Smart Choice for Fintech Businesses appeared first on Erlang Solutions.